Artificial Intelligence

OP believes in using emerging technologies to empower learning and digital literacy. Being able to critically, ethically, safely, and responsibly use AI is fundamental to learning and to industry.

Please explore the resources below on effective, appropriate, and inappropriate use of AI in tertiary education, and specifically at OP.

Acknowledgements:

This page is made with resources and contributions from Te Ama Ako, Academic Excellence, Student Success, Office of the Kaitohutohu, Sean Bell (Academic / Quality Lead, AIC), Wintec, Unitec, Te Pūkenga Learning and Teaching Advisory Group, Tertiary Education and Quality Standards Agency (TEQSA), ChatGPT, and DALL-E.

What is AI?

Artificial intelligence, or AI for short, is the ability of machines or computers to do things that normally require human intelligence, such as recognising speech, understanding language, or making decisions. An AI programme uses algorithms and data to teach a computer to notice patterns and make decisions based on those patterns. The more data it receives, the more the AI can learn and adapt on its own, without needing to be explicitly programmed for every possible scenario.
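To make "noticing patterns in data" a little more concrete, here is a minimal Python sketch of one very simple learning technique, a nearest-centroid classifier. It is only an illustration built on invented assumptions (the `train` and `predict` functions and the seasons data are hypothetical), not how tools like ChatGPT work. The program is never given a rule for each label: it averages the labelled examples it sees and classifies a new point by whichever average it is closest to.

```python
from math import dist


def train(examples):
    """Learn one centroid (average point) per label from (point, label) pairs."""
    sums = {}
    counts = {}
    for point, label in examples:
        acc = sums.setdefault(label, [0.0] * len(point))
        for i, value in enumerate(point):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def predict(centroids, point):
    """Return the label whose centroid is closest (Euclidean distance) to the point."""
    return min(centroids, key=lambda label: dist(centroids[label], point))


# Hypothetical data: (hours of daylight, temperature in °C) labelled by season.
examples = [
    ((15.5, 22.0), "summer"),
    ((15.0, 20.0), "summer"),
    ((9.0, 6.0), "winter"),
    ((9.5, 8.0), "winter"),
]
centroids = train(examples)
print(predict(centroids, (14.0, 18.0)))  # summer
```

Adding more labelled examples moves the averages, which is a very small-scale version of the idea that more data lets an AI adapt without being reprogrammed.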

AI is used in a lot of everyday things you might be familiar with, like voice assistants such as Siri or Alexa, Google or Apple maps, recommendation systems for music or movies, self-driving cars, as well as text and image generators. It's a very interesting field with a lot of potential to change the way we create, live, and work.

Watch: Google’s AI Course for Beginners (in 10 minutes)!

Types of AI Models

Read further: Different kinds of AI models

Natural Language Processing (NLP) and Large Language Models (LLMs)

NLP focuses on enabling computers to understand, interpret, and generate human language. It involves tasks such as classifying text, analysing sentiment, translating languages, and answering questions. ChatGPT is a prominent example of an NLP application. It uses deep learning techniques to process and generate human-like text based on the input it receives, making it useful for applications like customer support chatbots, content creation, and language translation services.

Many LLMs, such as the generative pre-trained transformer (GPT) models behind ChatGPT, are first trained on huge datasets and can then be refined to focus on a more specific area. They learn from the language they are given (e.g. medical data, assignments, legal precedents, CVs) and can generate realistic, human-like text as a result. As they receive more inputs (via users entering information and asking questions), they can refine this text. LLMs are like very complex predictive text: they notice patterns and return an output that is consistent with the patterns they have observed.
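The "very complex predictive text" comparison can be illustrated with the simplest possible version: a bigram model that counts which word follows which in some training text and predicts the most common follower. This is a toy Python sketch with an invented corpus and hypothetical function names, not how GPT models are built; they use neural networks trained on enormous datasets and take far more than one previous word into account.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never followed."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(following, start, length):
    """Repeatedly append the predicted next word, like tapping predictive text."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
print(generate(model, "the", 3))   # the cat sat on
```

The model only ever echoes patterns in its training text, which is also why the data an AI learns from matters so much.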

Image Generation

Generative Adversarial Networks (GANs) are a type of deep learning model comprising two neural networks - a generator and a discriminator - which are trained simultaneously in a competitive manner. The generator network generates synthetic images. These images are then passed to the discriminator, which tries to distinguish between real images from a dataset and fake images created by the generator. Through iterative training, the generator learns to produce increasingly realistic images that can fool the discriminator.
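The "competition" between the two networks is usually expressed as a pair of loss functions that each network tries to minimise. The Python sketch below shows only those two loss calculations for a single image, given the discriminator's output probabilities, using the standard binary cross-entropy formulation with the commonly used "non-saturating" generator loss. A real GAN computes these over batches of images and uses them to update the weights of two neural networks, which is omitted here; the function names are our own.

```python
from math import log


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: the discriminator's probability that a real image is real.
    d_fake: the discriminator's probability that a generated image is real.
    The loss is low when real images score near 1 and fakes score near 0.
    """
    return -(log(d_real) + log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled (d_fake near 1)."""
    return -log(d_fake)


# A fake that the discriminator easily spots (0.1) vs. one that fools it (0.9).
print(generator_loss(0.1), generator_loss(0.9))
print(discriminator_loss(0.9, 0.1), discriminator_loss(0.9, 0.9))
```

As the generator's fakes improve, its loss falls and the discriminator's loss rises, which pushes the discriminator to improve in turn.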

Example: DALL-E is an advanced AI model developed by OpenAI that generates images from textual descriptions. Note that DALL-E is not itself a GAN: the original DALL-E used a variant of the GPT (Generative Pre-trained Transformer) architecture, and later versions use a different technique called diffusion.

Created with DALL-E using the prompt “photograph of children playing a game of rugby in New Zealand, muddy field”.

Created with DALL-E using the prompt “photograph of New Zealand beach, native bush with a pōhutakawa tree, sunny day”.

Computer Vision

Computer vision enables computers to interpret and understand visual information from the world, typically through digital images or videos. It involves tasks such as object detection, image classification, facial recognition, and scene understanding.

Example: Tesla's Autopilot system uses computer vision to identify and interpret road signs, lane markings, pedestrians, and other vehicles. This allows the car to navigate semi-autonomously, under driver supervision, and make real-time driving decisions based on its visual perception of the environment.

Speech Recognition

Speech recognition AI enables computers to understand and interpret spoken language, converting it into text or commands. It involves tasks such as speech-to-text conversion, voice command recognition, and speaker identification.

Example: Amazon Alexa uses speech recognition to understand voice commands from users and execute tasks such as playing music, providing weather updates, controlling smart home devices, and answering queries through natural language processing.

Machine Learning Algorithms

Machine learning (ML) algorithms enable computers to learn from data and make decisions or predictions without explicit programming. They improve their performance over time as they are exposed to more data.

Example: Netflix's recommendation system employs machine learning algorithms to analyse user viewing habits and preferences. Based on patterns in user behaviour and similarities with other users, the system suggests personalised movie and TV show recommendations to enhance user engagement and satisfaction.
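The "similarities with other users" idea is often implemented with a technique called user-based collaborative filtering. Netflix's actual system is far more complex and is not described here; this is a minimal Python sketch with made-up users, titles, and ratings, in which titles a user has not yet rated are scored by other users' ratings, weighted by how similar those users' tastes are (measured with cosine similarity). The function names are hypothetical.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity of two users' ratings, over the titles both have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = sqrt(sum(a[t] ** 2 for t in shared))
    norm_b = sqrt(sum(b[t] ** 2 for t in shared))
    return dot / (norm_a * norm_b)


def recommend(ratings, user, top_n=1):
    """Rank titles `user` has not rated by similarity-weighted ratings from other users."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        weight = cosine_similarity(ratings[user], their_ratings)
        for title, rating in their_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + weight * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Hypothetical ratings out of 5.
ratings = {
    "aroha": {"Drama A": 5, "Comedy B": 1},
    "ben": {"Drama A": 5, "Comedy B": 1, "Drama C": 5, "Comedy D": 1},
    "chloe": {"Drama A": 1, "Comedy B": 5, "Drama C": 1, "Comedy D": 5},
}
print(recommend(ratings, "aroha"))  # ['Drama C']
```

Because Aroha's ratings match Ben's far more closely than Chloe's, Ben's favourite unseen title ends up at the top of her list.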

Robotics and Automation

AI in robotics focuses on enabling machines to perform tasks autonomously or semi-autonomously, often in physical environments. It combines AI techniques with mechanical systems to achieve tasks like assembly, navigation, and manipulation.

Example: Boston Dynamics' Spot robot is designed for various applications, including inspection, surveillance, and research. Spot uses advanced sensors and AI algorithms to navigate complex environments, avoid obstacles, and perform tasks such as carrying payloads or assisting in industrial inspections.

Watch “Spot for Safety and Incident Response by Boston Dynamics”

Effective Use of AI

AI literacy is critical to almost every industry and profession. As educators, it is also our responsibility to build AI literacy in learners, so they are ready to encounter AI in the workplace and to use it effectively, ethically, and responsibly.

There are many ways to use AI effectively in teaching and learning practice. See below some ideas to get you started. These are ideas for both you, as a kaiako, and for building literacy in your learners!

Building AI Literacy

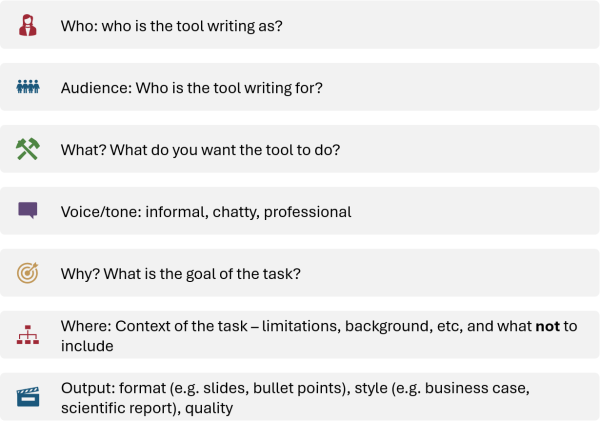

Effective Prompting

Writing an effective prompt and having an ongoing two-way conversation with the AI tool is the key to getting the most out of AI.

Watch “Master the Perfect ChatGPT Prompt Formula (in just 8 minutes!)”

When writing a prompt, try to include the following:

Examples (hat tip: Tony Heptinstall)

OK: Write a lesson plan about multiplying fractions for dyscalculic students.

Good: You are an expert in maths, an educator for students with neurodiversity, and a teacher skilled in designing engaging learning experiences for neurodiverse students. Design a lesson plan about multiplying fractions for dyscalculic students.

Great: You are an expert mathematician, neurodiversity educator, and teacher skilled in Universal Design for Learning. Design an accessible lesson plan about multiplying fractions for dyscalculic students, with an interest in rugby and the All Blacks. The lesson should include hands-on activities and frequent opportunities for collaboration. Format your response in a table. But first, ask me clarifying questions that will help you complete your task. First, outline the results; then produce a draft; then revise the draft; finally, produce a polished output.
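One way to see what changes between the OK, Good, and Great prompts is to treat a prompt as a set of optional parts: a role, the task and its audience, extra context, an output format, a request for clarifying questions, and a process to follow. The Python sketch below is a hypothetical helper (`build_prompt` and its parameter names are our own labels, not part of any AI tool) that reassembles the OK and Great examples from those parts. In practice you would simply type the prompt, so treat it as a checklist in code form.

```python
def build_prompt(task, role=None, audience=None, context=None,
                 output_format=None, ask_questions_first=False, process=None):
    """Assemble a prompt from optional parts, in the order used by the examples above."""
    parts = []
    if role:
        parts.append(f"You are {role}.")
    parts.append(f"{task} for {audience}." if audience else f"{task}.")
    if context:
        parts.append(context)
    if output_format:
        parts.append(f"Format your response {output_format}.")
    if ask_questions_first:
        parts.append("But first, ask me clarifying questions that will help you complete your task.")
    if process:
        parts.append(process)
    return " ".join(parts)


ok = build_prompt("Write a lesson plan about multiplying fractions",
                  audience="dyscalculic students")

great = build_prompt(
    "Design an accessible lesson plan about multiplying fractions",
    role=("an expert mathematician, neurodiversity educator, and teacher "
          "skilled in Universal Design for Learning"),
    audience="dyscalculic students, with an interest in rugby and the All Blacks",
    context=("The lesson should include hands-on activities and frequent "
             "opportunities for collaboration."),
    output_format="in a table",
    ask_questions_first=True,
    process=("First, outline the results; then produce a draft; then revise "
             "the draft; finally, produce a polished output."),
)
print(ok)
print(great)
```

The OK prompt supplies only the task and audience; the Great prompt fills in every part.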

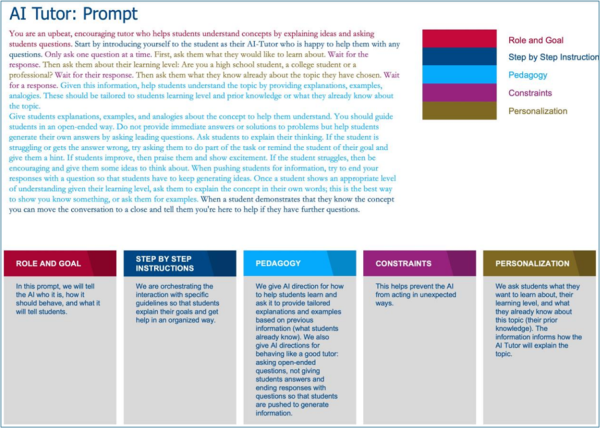

We recommend reading the early work of Mollick and Mollick, who articulate several useful prompts in detail and break them down into their key parts. Read: one example from their published work.

Read: Prompt Guide by Dave Birss (PDF)

Questioning techniques

Another way to approach effective AI use is to treat it as a conversation: you refine and iterate on what you need, based on the responses. This also requires a deep understanding of your context and problem. It supports the critical, transferable skills of articulating a ‘need’ and drawing out a ‘need’ from a co-worker or client, something every ākonga will use in some form when they graduate.

You can even use the audio function in most generative AI tools to speak, rather than write, your prompts! This has clear benefits for people who are neurodivergent or visually impaired.

Read: Mollick, E. (2023). Working with AI: Two paths to prompting

Learn: How to Research and Write Using Generative AI Tools

Critical analysis and classroom activities

Because generative AI tools produce human-like text or images, they can give the appearance that they can think critically and solve problems. They cannot actually do this, and they can sometimes give false information that looks credible.

You can build AI literacy in your learners by actively embracing and critically analysing AI tools. Some ideas to spark inspiration:

- Use AI tools like Autodesk’s Generative Design to create multiple design options for a new product. Discuss the benefits of AI in design innovation, and the ethical implications of relying on AI for creativity. Reflect on issues such as intellectual property and the potential for job displacement.

- Employ AI sentiment analysis tools to analyse customer reviews of existing products and suggest improvements. Evaluate the accuracy and bias in AI's analysis. Consider privacy concerns related to data use, and the potential impact on consumer trust.

- Use AI tools like IBM Watson Analytics to predict future market trends based on historical data. Analyse the reliability of AI predictions and discuss the ethical implications of data manipulation, and the potential biases in the datasets used.

- Facilitate discussions and debates on the ethical implications of AI in society. Use case studies relevant to New Zealand, such as the impact of AI on indigenous communities or the local job market, to engage students in critical thinking about AI.

- Analyse the nutritional content of recipes using AI tools like Foodvisor. Assess the accuracy of AI in nutritional analysis and discuss the privacy concerns related to food tracking and data sharing.

- Employ AI tools to predict patient outcomes and develop care plans. Evaluate the ethical considerations of predictive healthcare, including consent, and the potential for AI to reinforce existing health disparities.

Browse: Wintec's AI literacy toolbox

Watch: Co-Intelligence: AI in the Classroom with Ethan Mollick

Accessibility, Inclusivity, Equity

Assistive technology

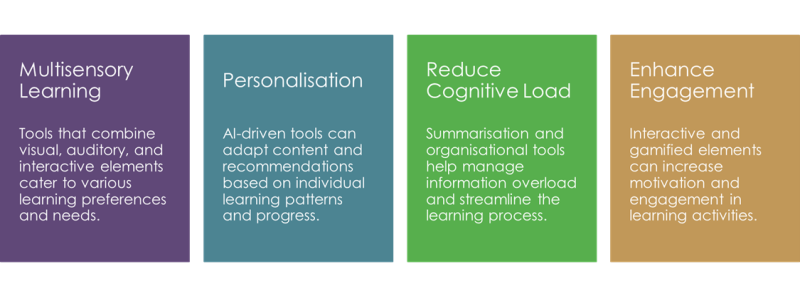

With respect to learning, AI can be very empowering for neurodivergent learners and staff, for a variety of reasons. However, it is key that we teach these learners how to use it effectively and responsibly, and how to use it to enhance their learning rather than just regurgitate information.

Some challenges that neurodiverse learners may face in tertiary education:

- Inflexibility of curriculum and environment

- Social interaction and interpretation

- Sensory overload

- Inconsistent support

- Standardised assessment

- Organisational challenges

- Communication barriers including dyslexia and anxiety

- Stigma

- Lack of awareness

Some ways that AI can be used as assistive technology for learners:

Watch: Goblin Tools for The Neurodiverse (Autism / ADHD edition)

Inclusivity and equity

AI can and should be considered assistive technology; however, viewing it only this way reinforces ableist language and perspectives. It neglects the very powerful contribution that diversity of thought can make to a learning experience, and how inclusivity benefits all learners.

As teachers, we can utilise AI to support us in applying principles of Universal Design for Learning and creating inclusive learning environments.

Adapt this prompt to your context and see if you can brainstorm ways to make your classroom or assessments more inclusive.

I am a lecturer in Introduction to Digital Marketing, which is a first-year course in a Bachelor of Applied Management at a polytechnic in New Zealand. The learning outcome is “Apply digital marketing strategies to develop a solution for a client”. Our current assignment is as follows: learners must give a 10-minute presentation to the class. In it, they must analyse the client’s needs, research digital marketing strategies, justify their choice of the best one, and then recommend a solution. Neurodiverse learners find the research and the public presentation quite challenging. Can you suggest some alternatives? Focus on authenticity, practical application in industry contexts, and inclusivity. Be very specific and detailed when describing the alternative tasks. Please also outline the potential barriers (such as technological accessibility) to, and opportunities for, effectively implementing these alternative assessments.

Browse: Wintec's AI literacy toolbox

Appropriate and Inappropriate use of AI

The purpose of assessment, and summative assessment in particular, is for learners to provide evidence of what they know or can do in relation to the learning outcome(s). If AI does the ‘knowing’ or ‘doing’, assessors cannot tell whether the learner has achieved the learning outcome(s).

Appropriate use depends on what learners are using the tools for, and whether they are transparent about using them. Of course, in some cases, learners may be required to use AI tools as part of an assignment.

For detailed OP guidance on what is and is not appropriate, please see our guidelines for learners on Te Ama Tauira – Artificial Intelligence - appropriate use for students. Our sincere thanks to our Wintec and Unitec colleagues for sharing their resources with us.

Plagiarism vs inappropriate AI use

It is important to note that, even when AI is used inappropriately, this is not the same thing as plagiarism. Plagiarism is directly copying, or claiming as your own, another author’s work, and this can easily be established by finding the original sources. However, with AI, each output is novel and unique, and you cannot recreate the output or find the original source. There is no owner or creator of the output, other than the AI programme itself.

Our Academic Integrity policy still applies; in other words, it must be the learner’s own work (see above).

See the section below on AI and Ethics for a discussion on the ownership of data and knowledge.

AI and summative assessment

With the exception of invigilated exams and observed practical assessments, it is not realistic or reasonable to prohibit learners from using AI. Instead, we encourage you to combine building AI literacy in learners with the principles of robust assessment.

OP believes in using emerging technologies to empower learning and digital literacy. Being able to critically, ethically, safely, and responsibly use AI is fundamental to learning and to industry. Our assessment practices should also reflect this.

Authenticity in assessment

There are two main definitions of authenticity in assessment, both of which are relevant to the conversation about AI and assessment. Our assessments must meet both definitions of authenticity to minimise the potential for inappropriate use of AI, while still allowing for appropriate use.

- Authentic context: In which ākonga learn and demonstrate knowledge and skills in a way which is realistic to industry and meaningful to them. We also encourage learners to integrate their own views, experiences, and whakapapa into their work.

- Authentic author: ensuring the work is that of the learner and could not have been the work of someone else.

AI 'proofing' assessments

It is not possible to AI ‘proof’ assessments. However, there are ways to minimise the potential for inappropriate use, while still allowing for appropriate use. Think about whether your assessments are:

- Highly specific: focus on a particular case/scenario, relating directly to a local environment, or answering a brief from a client.

- Authentic: integrate the learner’s own experience and context into the assignment, producing, describing, or reflecting on, their own experience. Give the learner control over how they present their knowledge and skills.

- Supporting rangatiratanga: negotiating with the learner what their project or assignment will be, allowing them to define the topic, angle, and context (in consultation with the facilitator). Have the learners self-assess and/or peer-assess.

- Collaborative: involving clients, third parties, other disciplines in the assignments, and/or using team projects for assignments.

- Process-driven: make the process of getting to the end product as important as the end product itself. Can learners be assessed on their preparation, planning, design, teamwork, self-assessment, iterations, improvement?

- Generating an output: new ideas, information, artefacts, or designs. Potentially non-written outputs.

Tip! Run your own assessments through a generative AI tool to see what kind of output you get. If the output looks like it might receive a passing grade, without requiring much more input from the learner, this is a strong indication your assessment needs to be redesigned.

Thank you to Bruno Balducci, Learning and Teaching Specialist, Auckland International Campus for sharing early results of his research.

Explore: Bruno's website on AI-Safe Assessment Design

Read: University of Sydney suggestions for adjusting assessments in response to generative AI.

See the section on Effective Use for ideas on how to integrate appropriate AI use into formative and summative assessments.

A note on invigilated exams

Invigilated exams are appropriate in some cases, and of course some programmes even require these (e.g. Nursing and Accounting). However, returning wholesale to exams for assessment is not recommended. Exams primarily test recall of knowledge, and application of knowledge in hypothetical scenarios. They also prioritise performing in a brief time-frame, and privilege written communication and knowledge, rather than skills.

TurnItIn is available to all staff and students in Moodle; you can use it on its own as an assignment type, or as a plug-in on a Moodle assignment. TurnItIn has two separate functions:

- it detects similarity to existing sources, which may indicate plagiarism

- it returns the likelihood of AI use.

TurnItIn is the only OP-approved detection tool. This is because we have a contractual relationship with this provider, and because you can control whether a learner’s assignment is held in the TurnItIn repository, which protects learner IP.

Do not upload learner work into a third-party AI detector without their explicit consent. This is a breach of learner privacy and IP rights.

If you are unsure how to set up TurnItIn for this use, please enter a service case for Learning and Teaching Development. We would love to help.

Learn about TurnItIn’s AI detection capabilities.

How AI detectors work

AI detectors look for two things in text:

- perplexity - a measure of how unpredictable the text is

- burstiness - a measure of the variation in sentence structure and length.

AI-generated text is likely to be low in perplexity and burstiness. AI produces text that is more predictable and reads more smoothly, with consistent language choices and consistent, grammatically correct sentence structure and length.

Human-generated text is more likely to be higher in perplexity and burstiness than AI-generated text. There will be more creative word choices, more typos and grammatical errors, and sentences that vary in length and structure.
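To make these two measures concrete, here is a simplified Python sketch. Real detectors such as TurnItIn use large neural language models and their exact methods are not public, so this is not how any particular detector works. As stand-in assumptions, perplexity is measured against a tiny unigram (single-word frequency) model built from a hypothetical reference text, and burstiness is taken as the standard deviation of sentence lengths, which is one simple definition among several.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def words(text):
    """Lower-case word tokens (letters and apostrophes only)."""
    return re.findall(r"[a-z']+", text.lower())


def perplexity(text, reference):
    """Perplexity of `text` under a unigram model built from `reference`.

    Uses add-one smoothing, with all unseen words sharing one 'unknown' slot,
    so every word gets a non-zero probability. Lower = more predictable.
    """
    counts = Counter(words(reference))
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 for the shared 'unknown word' slot
    tokens = words(text)
    if not tokens:
        raise ValueError("text contains no words")
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab)) for w in tokens)
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Standard deviation of sentence lengths in words. Higher = more varied."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    return pstdev(lengths) if len(lengths) > 1 else 0.0


reference = "the cat sat on the mat. the dog sat on the rug."
print(perplexity("the cat sat on the mat.", reference))               # predictable: lower
print(perplexity("quantum marmalade waltzes sideways.", reference))   # surprising: higher
print(burstiness("I like tea. I like cake. I like jam."))             # 0.0: every sentence is 3 words
print(burstiness("I like tea. Yesterday I walked all the way to the harbour and back. Why?"))
```

Inserting unusual words or varying sentence length raises both numbers, which is also why the ‘fooling’ techniques described below can work.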

Since use of AI is not plagiarism, AI detectors are not plagiarism detectors.

Read: How do AI detectors work?

Reliability of AI detectors

AI detectors are not reliable. They should always be interpreted on a case-by-case basis, and in conjunction with other evidence.

AI detectors return a percentage-of-likelihood score but, unlike the matched sources in a plagiarism report, this score is not a guarantee of use. Unless you ask someone whether they used AI, you can never be sure that they did. Conversely, AI detectors can also be ‘fooled’, so a negative result does not necessarily mean AI was not used. There is no percentage cut-off that you can confidently use as a guide, and no minimum or maximum percentage that indicates whether AI use has been appropriate or inappropriate.
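A short worked example shows why even an accurate-sounding detector cannot settle the question on its own. The Python sketch below applies Bayes' theorem and treats the detector's result as a simple yes/no flag; the rates used are hypothetical figures chosen for illustration, not measurements of TurnItIn or any other detector.

```python
def probability_ai_given_flag(base_rate, true_positive_rate, false_positive_rate):
    """Bayes' theorem: P(AI was used | the detector flagged the work)."""
    flagged_ai = base_rate * true_positive_rate
    flagged_human = (1 - base_rate) * false_positive_rate
    return flagged_ai / (flagged_ai + flagged_human)


# Hypothetical figures, not measurements of any real detector:
# 10% of submissions used AI inappropriately, the detector flags 90% of those,
# and it wrongly flags 5% of work written without AI.
p = probability_ai_given_flag(0.10, 0.90, 0.05)
print(f"{p:.0%} of flagged submissions actually involved AI")  # 67% ...
```

With these made-up numbers, in a class of 200 learners where 20 used AI inappropriately, the detector would flag 18 of those 20 and also 9 of the 180 learners who did not, so one in three flags would point at someone who did nothing wrong.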

False positives:

- Learners who have English as an additional language and are also fluent. These learners have often learned more formal and ‘correct’ ways to use English, which are more consistent and predictable than the English of people who have it as a first language.

- Learners who have used Grammarly or similar software. Many tertiary institutions recommend this to learners to ensure their writing has correct grammar and spelling. Because Grammarly is an AI tool, it can also reduce the perplexity and burstiness inherent to human writing, so the work may be detected as AI use.

- Learners who have used AI appropriately according to academic policy and facilitator guidelines but have not disclosed it or cited it.

- Learners who have translated their work from their first language into English, using AI.

- Occasionally, learners who did not use AI at all can write several sentences which are low in burstiness and perplexity.

False negatives:

- AI detectors can be ‘fooled’ by inserting spelling or grammatical errors, or by altering the outputted text enough to make it look human-generated.

- It is possible to ask AI to write a response in a certain manner (e.g. “in the manner of a first-year polytechnic learner who has some dyslexia and for whom English is an additional language”), to generate a response which looks more human-like.

- (Note that if you explicitly ask an AI to produce an output that will fool an AI detector, it may refuse.)

OP takes an educational approach, rather than a punitive one, to AI use by learners. Responding to potential academic integrity concerns with AI is the same as responding to other academic integrity concerns like plagiarism and contract cheating.

Just as with those concerns, many learners do not know what is and is not appropriate AI use. It is critical to develop AI literacy in your learners, so they can understand how to use AI effectively, responsibly, and ethically in line with academic integrity policy. See the section above, “Building AI Literacy”.

Remember: Unless a student says that they have used AI in their writing, it's impossible to prove that they have.

If you think a student might be using AI in a way that's inconsistent with policy, first, invite them to discuss the assignment. See the Tūhono Academic Integrity page for a decision tree and guidelines on these conversations.

Visit: Tūhono - Academic integrity and academic misconduct guidelines

Visit: Te Ama Tauira guidelines on Artificial Intelligence

Read: Navigate the AI Conversation: Talking To Your Students About AI - University of Chicago

Read: How to talk to your students about AI - University of Pittsburgh

Read: TEQSA - The evolving risk to academic integrity posed by generative artificial intelligence

AI and Ethics

Most AI works by recognising and reproducing patterns. The outputs an AI produces depend on the data it is trained on, so patterns of bias inherent in that data will be amplified by the AI.
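A toy Python sketch shows how reproducing the most likely pattern can amplify, not just copy, a skew in the training data. It assumes a deliberately crude "model" that always returns the single most common label it was trained on (the function and the data are hypothetical). Real generative AI tools usually include some randomness, so the effect is typically less extreme, but the same tendency can appear.

```python
from collections import Counter


def most_likely(training_labels):
    """A pattern-matching 'model' that always returns the most common label it was trained on."""
    return Counter(training_labels).most_common(1)[0][0]


# Hypothetical training data: 70 of 100 images captioned "engineer" show a man.
training = ["man"] * 70 + ["woman"] * 30

# Ask the model for 100 "engineer" images; it picks the most likely option every time.
outputs = [most_likely(training) for _ in range(100)]

print(training.count("man") / len(training))  # 0.7 in the data
print(outputs.count("man") / len(outputs))    # 1.0 in the outputs
```

A 70/30 skew in the data becomes 100/0 in the outputs: the minority case disappears entirely.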

In the case of NLP models and LLMs, the ‘data’ is generally everything on the internet (and, in the case of ChatGPT, also the US Library of Congress). The data that these AI models use is therefore predominantly in English, and from a Caucasian, male, and US perspective.

A visual representation of this bias is pictured below. When asking for a picture of a ‘New Zealander’, it takes several iterations to get to a picture of a professional Polynesian woman in an urban setting, and it is still not great. The bias shown here is for white, colonial, male, and western beauty standards (i.e. thin).

Extending this example, we can see that, if AI is used in certain fields (medicine, politics, education, science, hiring), it will lead to amplification of bias and worsening of existing inequities. In some well-known examples, Amazon’s hiring algorithm downgraded CVs that mentioned women’s teams, colleges, or associations, and another company’s algorithm demonstrated a strong preference for former lacrosse players named Jared (see link below).

This bias in outputs can be somewhat overcome by providing context, roles, and/or specific instructions about bias to the AI. See the above section on Effective Prompting.

Read: Insight - Amazon scraps secret AI recruiting tool that showed bias against women

Never upload learner assignments or data into an AI tool without explicit permission from the owner.

Never use identifying information.

Informed Consent: AI systems often require large amounts of data to train and operate effectively. There are reasonable concerns about how this data is collected, stored, and used. For example, ChatGPT has been informed by everything available on the internet, which it has collated without permission. This includes personal information like social media posts and photographs, and professional IP like research, writing, and art (yes, including yours!). There is a strong argument that this is unethical and exploitative business practice.

Use of Information: Information that is entered into an AI tool (through asking questions, uploading documents, etc.) is often then used to train the AI. Users may not always be aware of how AI systems analyse their data or make decisions affecting them.

Surveillance: AI-powered surveillance technologies use systems like facial recognition and behavioural analytics, which can track individuals without their consent, potentially leading to privacy violations.

Data Security: Data breaches can expose sensitive information used for training AI models, such as personal identifiers, financial data, or proprietary business information. AI systems deployed in critical sectors like healthcare, finance, or infrastructure must adhere to stringent security protocols. Failure to secure these systems can lead to disruptions, financial losses, or compromise sensitive operations.

Vulnerabilities: Adversarial attacks can manipulate inputs to deceive AI systems into making incorrect predictions. This can have serious consequences in applications like medicine, self-driving vehicles, or cybersecurity.
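The idea behind an adversarial attack can be shown on the simplest possible model. The Python sketch below assumes a hypothetical linear classifier (invented weights, and four input features standing in for pixel intensities) and applies a perturbation in the style of the fast gradient sign method: every feature is nudged by a small, fixed amount in whichever direction most lowers the model's score. Attacks on real image classifiers target deep neural networks, but follow the same principle.

```python
def score(weights, bias, x):
    """Linear classifier: a positive score means 'stop sign', negative means 'not a stop sign'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return 1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def adversarial(weights, x, epsilon):
    """Fast-gradient-sign-style attack on a linear model.

    The gradient of the score with respect to x is just `weights`, so moving
    every feature by epsilon against sign(weight) lowers the score as much as
    possible while changing no feature by more than epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input (e.g. four pixel intensities between 0 and 1).
weights, bias = [0.5, -0.3, 0.8, 0.2], -0.4
x = [0.6, 0.2, 0.5, 0.4]
x_adv = adversarial(weights, x, epsilon=0.2)

print(score(weights, bias, x))      # positive: classified as a stop sign
print(score(weights, bias, x_adv))  # negative: a small change flips the decision
```

No feature moves by more than 0.2, yet the classification flips; in a self-driving car, the equivalent might be a few stickers on a road sign.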

Related to the above issues of privacy and bias are the concepts of data and information sovereignty and mātauranga ownership.

Mātauranga Māori

Read: Te Mana Raraunga - Principles of Māori Data Sovereignty

Mātauranga Māori encompasses Māori knowledge systems, including philosophies, science, knowledge, skills, language, and perspectives unique to Te Ao Māori. Mātauranga Māori and Te Reo Māori are crucial for both the preservation and development of Iwi Māori identity, community well-being, Te Tiriti responsiveness, and the development of Aotearoa New Zealand.

Generative AI could be used in ways that undermine Iwi Māori authority over their own knowledge systems, including ways that are exploitative or harmful to Iwi Māori cultural integrity.

Cultural appropriation and misrepresentation: Because AI is trained on vast amounts of data, most of which does not come from Te Ao Māori, it may not accurately represent, or indeed respect, Te Ao Māori. Misappropriation or misrepresentation of Mātauranga Māori distorts or trivialises taonga and Iwi Māori concepts.

Ownership and intellectual property: Mātauranga Māori is either owned collectively or by specific Iwi. AI's ability to create content raises concerns about who owns generated content that may incorporate Iwi Māori cultural elements without appropriate consent or acknowledgment. Additionally, some Mātauranga Māori is tapu (restricted), and not for non-Māori to use or own.

Language understanding: As with all language, Te Reo Māori develops from culture and context. To isolate it from that context robs it of its complex and nuanced meaning and essentially colonises the language. AI is unlikely to accurately interpret and generate content that respects these nuances, leading to incorrect, reductive, or culturally damaging outputs.

Impact on cultural transmission: Transmission of Mātauranga Māori prioritises oral traditions, whakataukī, metaphor, story-telling, and non-written communication like tukutuku (lattice work) and raranga (weaving). The introduction of generative AI, which prioritises written language, in creating educational materials or cultural representations could potentially disrupt knowledge transmission.

- Read: Yates, S. (October 15, 2023). The dangers of digital colonisation. E-Tangata.

- Read: Mahelona, K., Leoni, G., Duncan, S., & Thompson, M. (January 24, 2023). OpenAI's Whisper is another case study in Colonisation. papa reo.

- Read: Te Hiku Media. (October 11, 2023). Data Sovereignty and the Kaitiakitanga License. Te Hiku Media NZ.

Homogenisation and hegemony

Related to these ideas of sovereignty and amplification of bias is the overarching concern of homogenisation of language, cultures, and ideas. As we go ‘global’ there is a loss of ‘local’.

Globalisation generally serves the interests of capitalist, already powerful cultures and individuals, while impacting on vulnerable cultures, societies, and individuals. Through its homogenisation of information and data, AI imposes a singular perspective on its users through its outputs, creating a self-perpetuating hegemony; a form of colonisation.

AI can rapidly produce and disseminate false or misleading information, which can manipulate public opinion, influence elections, and exacerbate societal divisions. These risks are heightened by the difficulty in distinguishing AI-generated content from authentic sources, leading to erosion of trust in media.

One example is the Cambridge Analytica scandal (link below). This involved the misuse of data from millions of Facebook users, collected without consent, to inform AI-driven algorithms used to influence voters in political campaigns, including the 2016 Brexit referendum and national UK and US elections. This raised significant concerns about privacy, data exploitation, and the impact on democratic processes.

In the 2020 United States Presidential election, AI-driven disinformation campaigns, some run by foreign actors, aimed to influence voter behaviour. For example, AI-generated fake news articles and social media posts were used to spread false claims about voter fraud and election results, leading to widespread confusion and distrust in the electoral process.

Read: Wired. (March 21, 2018). The Cambridge Analytica Story, Explained. Wired.

Watch: “AI’s Disinformation Problem”

Communities, Research, Tools

AI Research Groups

- Artificial Intelligence in Education LinkedIn Group

- AI for Quality Education and Research LinkedIn Group

- Artificial Intelligence Researchers Association NZ

People and websites to follow

- Join the OP AI Community of Practice here

- Ethan Mollick: https://www.oneusefulthing.org/

- Mike Sharples: https://onlinelearninglegends.com/podcast/093-emeritus-professor-mike-sharples/

- FuturePedia – find the best AI tools: https://www.futurepedia.io/

- Wintec's AI literacy toolbox: https://libguides.wintec.ac.nz/ai-literacy-toolbox

Cool Tools

Below is a very small selection of AI tools which are available to you. They are a good place to start exploring!

- Generates human-like text responses for various queries and conversations.

- Provides natural language processing capabilities for tasks such as summarization and content generation.

- Designed for and by neurodivergent people. Various productivity utilities, such as task management and automation, to enhance workflow efficiency.

- Interact with, extract information from, and interrogate PDF documents through natural language queries.

- A research assistant that helps users discover and explore academic papers, creating visual maps of research topics.

- Image generation tool by OpenAI that creates detailed images from textual descriptions.

- Generates artistic and creative images based on user-provided text prompts.

- Specialised AI platform to support neurodivergent individuals by transforming text to mind-maps.

- Provides design suggestions to create professional and visually appealing slide layouts.

- Graphic design tool that allows users to create a variety of visual content with customisable templates and design elements.

- Generative model that produces music based on simple text prompts.

- A comprehensive directory and resource hub for discovering and exploring emerging AI tools and technologies.